Abstract

- https://arxiv.org/pdf/2403.19046

- Recent works often overlook the importance of temporal localization

- The key aspects that limit the temporal localization abilities are:

- time representation

- architecture

- data

- Hence, new architecture, LITA, is proposed in this paper which is capable of:

- leveraging time tokens to better represent time in videos

- handling SlowFast tokens to capture temporal information at fine resolution

- addressing challenges from newly introduced Reasoning Temporal Localization dataset

→ but actually this model and dataset do not handle temporal object localization problem. What this paper focus on is only related to actions.

Motivation

There are not enough datasets and architectures to accurately specify temporal location of an event. Previous works such as Video-LLaMA only sample a few frames thoughout the video, which is not sufficient number to temporally localize events.

Therefore, newly introduced architecture, LITA(Language Instructed Temporal-localization Assistant) efficiently handle such scenarios.

The first important design of LITA is to use relative representation for time. e.g. first 10% instead of 01:22.

The second one is densely sampled input frames from videos. But as we may know, sampling multiple tokens from each frame may results in intractable number of tokens. To address this issue, new tokens--fast tokens and slow tokens are introduced.

To instruct-tune this model, new task and dataset -- Reasoning Temporal Localization are introduced. It's simply a temporal localization problem from a given video according to the text instruction which may be implicitly refer the action or object.

Methods

The architecture is quite simple. As you can see, overall architecture is almost identical to the LLaVA.

Time Tokens. One distinguishing point is that this model utilizes SlowFast mechanism since it has to see every single frames of given video. Additionally, $T$ specialzed time token are added to the each video token chunks. The time tokens are like <1> to <T>, which greatly simplifies the time representation with LLM, enabling replyig with such time tokens.

SlowFast Visual Tokens. Similar to the previous approaches, this model adopts SlowFast mechanism, which samples low-resolution fast tokens frequently from the video and at the same time, high-resolution slow tokens more sparsely.

Reasoning Temporal Localization

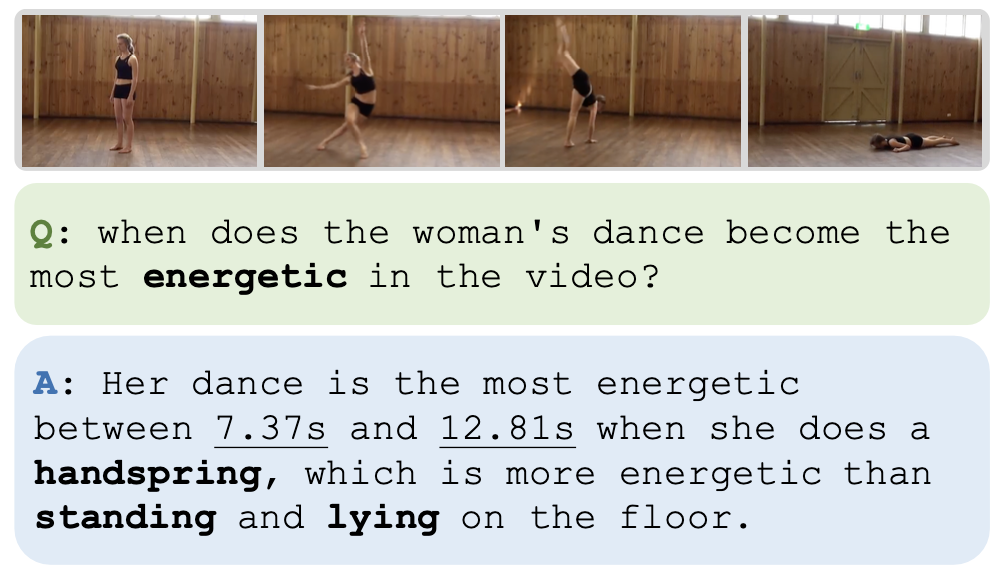

The concept of this newly introduced task is straightforward. It's kind of temporal grounding problem which requires reasoning ability to accurately ground it. For details, please refer Fig. 3.

The dataset was generated utilizing GPT-4 from ActivityNet Captions dataset. The total number of the training datset is 10,009 videos and 33,557 QA pairs, and 229 QA paris for 160 videos for evaluations.

Experiments

Discussion

References

Footnotes

'DL·ML > Paper' 카테고리의 다른 글

| Video Token Merging(VTM) (NeurIPS 2024, long video) (1) | 2025.01.24 |

|---|---|

| TemporalVQA (0) | 2025.01.22 |

| NExT-Chat (ICML 2024, MLLM for OD and Seg) (0) | 2025.01.22 |

| STVG (VidSTG, CVPR 2020) (0) | 2025.01.21 |

| LongVU (Long Video Understanding) (0) | 2025.01.20 |