LITA (ECCV 2024)

·

DL·ML/Paper

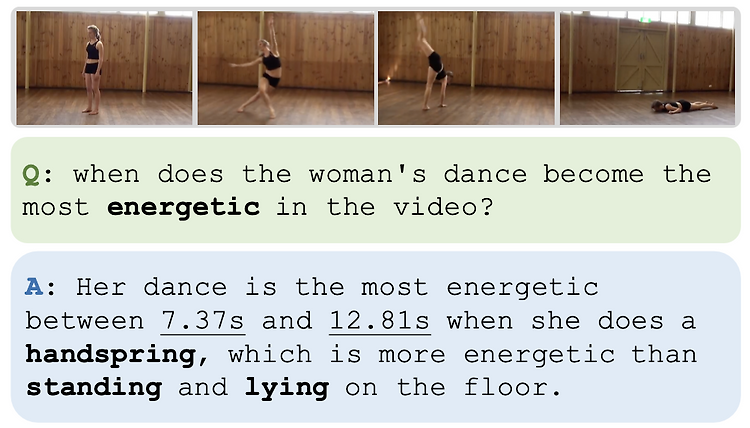

Abstracthttps://arxiv.org/pdf/2403.19046 Recent works often overlook the importance of temporal localizationThe key aspects that limit the temporal localization abilities are:time representationarchitecturedataHence, new architecture, LITA, is proposed in this paper which is capable of:leveraging time tokens to better represent time in videos handling SlowFast tokens to capture temporal informat..